$ uname -r
2.9.0(0.318/5/3)

I don't have the gcc or the g++ command on my path after installing Cygwin. Which packages do I need to install to get these commands?

In my case, the problem was solved by installing libpq-dev on Ubuntu:

$ sudo apt install libpq-dev

Then remove the node_modules directory and run npm install again.

Note: this is not a problem with the Node modules themselves. The problem was on my machine, which didn't meet the requirements of libpq.

Build a TensorFlow pip package from source and install it on Ubuntu Linux and macOS. While the instructions might work for other systems, they are only tested and supported on Ubuntu and macOS.

The Speech software development kit (SDK) exposes many of the Speech service capabilities, to empower you to develop speech-enabled applications. The Speech SDK is available in many programming languages and across all platforms.

| Programming language | Platform | SDK reference |
|---|---|---|
| C# ¹ | Windows, Linux, macOS, Mono, Xamarin.iOS, Xamarin.Mac, Xamarin.Android, UWP, Unity | .NET SDK |
| C++ | Windows, Linux, macOS | C++ SDK |
| Go | Linux | Go SDK |
| Java ² | Android, Windows, Linux, macOS | Java SDK |
| JavaScript | Browser, Node.js | JavaScript SDK |
| Objective-C / Swift | iOS, macOS | Objective-C SDK |
| Python | Windows, Linux, macOS | Python SDK |

¹ The .NET Speech SDK is based on .NET Standard 2.0, so it supports many platforms. For more information, see .NET implementation support.

² The Java Speech SDK is also available as part of the Speech Devices SDK.

Scenario capabilities

The Speech SDK exposes many features from the Speech service, but not all of them. The capabilities of the Speech SDK are often associated with scenarios. The Speech SDK is ideal for both real-time and non-real-time scenarios, using local devices, files, Azure blob storage, and even input and output streams. When a scenario is not achievable with the Speech SDK, look for a REST API alternative.

Speech-to-text

Speech-to-text (also known as speech recognition) transcribes audio streams to text that your applications, tools, or devices can consume or display. Use speech-to-text with Language Understanding (LUIS) to derive user intents from transcribed speech and act on voice commands. Use Speech Translation to translate speech input to a different language with a single call. For more information, see Speech-to-text basics.

Speech-Recognition (SR), Phrase List, Intent, Translation, and On-premises containers are available on the following platforms:

- C++/Windows & Linux & macOS

- C# (Framework & .NET Core)/Windows & UWP & Unity & Xamarin & Linux & macOS

- Java (JRE and Android)

- JavaScript (Browser and Node.js)

- Python

- Swift

- Objective-C

- Go (SR only)
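As a rough illustration of single-shot speech recognition from Python, the sketch below assumes the `azure-cognitiveservices-speech` package is installed and that you have a Speech resource key and region. The function name and its parameters are placeholders chosen for this example, and the SDK import is deferred so the snippet can be loaded on a machine without the SDK.

```python
def recognize_once_from_microphone(key, region):
    """Recognize a single utterance from the default microphone.

    Returns the recognized text, or None if no speech was recognized.
    `key` and `region` are your own Speech resource values (assumed inputs).
    """
    # Imported here so that merely loading this file does not require the SDK.
    import azure.cognitiveservices.speech as speechsdk

    speech_config = speechsdk.SpeechConfig(subscription=key, region=region)
    # Without an explicit audio configuration, the default microphone is used.
    recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config)

    # recognize_once() listens until the first utterance ends.
    result = recognizer.recognize_once()
    if result.reason == speechsdk.ResultReason.RecognizedSpeech:
        return result.text
    return None
```

Calling `recognize_once_from_microphone("<your-key>", "<your-region>")` needs a working microphone and network access. Continuous recognition, translation, and intent recognition use different recognizer classes and are not covered by this sketch.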

Text-to-speech

Text-to-speech (also known as speech synthesis) converts text into human-like synthesized speech. The input text is either a string literal or Speech Synthesis Markup Language (SSML). For more information on standard or neural voices, see Text-to-speech language and voice support.

Text-to-speech (TTS) is available on the following platforms:

- C++/Windows & Linux

- C#/Windows & UWP & Unity

- Java (JRE and Android)

- Python

- Swift

- Objective-C

- TTS REST API can be used in every other situation.

Voice assistants

Voice assistants using the Speech SDK enable you to create natural, human-like conversational interfaces for your applications and experiences. The Speech SDK provides fast, reliable interaction that includes speech-to-text, text-to-speech, and conversational data on a single connection. Your implementation can use the Bot Framework's Direct Line Speech channel or the integrated Custom Commands service for task completion. Additionally, voice assistants can use custom voices created in the Custom Voice Portal to add a unique voice output experience.

Voice assistant support is available on the following platforms:

- C++/Windows & Linux & macOS

- C#/Windows

- Java/Windows & Linux & macOS & Android (Speech Devices SDK)

- Go

Keyword recognition

The concept of keyword recognition is supported in the Speech SDK. Keyword recognition is the act of identifying a keyword in speech, followed by an action upon hearing the keyword. For example, 'Hey Cortana' would activate the Cortana assistant.

Keyword recognition is available on the following platforms:

- C++/Windows & Linux

- C#/Windows & Linux

- Python/Windows & Linux

- Java/Windows & Linux & Android (Speech Devices SDK)

- Keyword recognition might work with any microphone type. Official keyword recognition support, however, is currently limited to the microphone arrays found in the Azure Kinect DK hardware or the Speech Devices SDK.

Meeting scenarios

The Speech SDK is perfect for transcribing meeting scenarios, whether from a single device or multi-device conversation.

Conversation Transcription

Conversation Transcription enables real-time (and asynchronous) speech recognition, speaker identification, and sentence attribution to each speaker (also known as diarization). It's perfect for transcribing in-person meetings with the ability to distinguish speakers.

Conversation Transcription is available on the following platforms:

- C++/Windows & Linux

- C# (Framework & .NET Core)/Windows & UWP & Linux

- Java/Windows & Linux & Android (Speech Devices SDK)

Multi-device Conversation

With Multi-device Conversation, connect multiple devices or clients in a conversation to send speech-based or text-based messages, with easy support for transcription and translation.

Multi-device Conversation is available on the following platforms:

- C++/Windows

- C# (Framework & .NET Core)/Windows

Custom / agent scenarios

The Speech SDK can be used for transcribing call center scenarios, where telephony data is generated.

Call Center Transcription

Call Center Transcription is a common scenario for speech-to-text: transcribing large volumes of telephony data that may come from various systems, such as Interactive Voice Response (IVR). The latest speech recognition models from the Speech service excel at transcribing this telephony data, even in cases when the data is difficult for a human to understand.

Call Center Transcription is available through the Batch Speech Service via its REST API and can be used in any situation.

Codec compressed audio input

Several of the Speech SDK programming languages support codec compressed audio input streams. For more information, see Use compressed audio input formats.

Codec compressed audio input is available on the following platforms:

- C++/Linux

- C#/Linux

- Java/Linux, Android, and iOS

REST API

While the Speech SDK covers many feature capabilities of the Speech Service, for some scenarios you might want to use the REST API.

Batch transcription

Batch transcription enables asynchronous speech-to-text transcription of large volumes of data. Batch transcription is only possible from the REST API. In addition to converting speech audio to text, batch speech-to-text also allows for diarization and sentiment-analysis.

Customization

The Speech Service delivers great functionality with its default models across speech-to-text, text-to-speech, and speech-translation. Sometimes you may want to increase the baseline performance to work even better with your unique use case. The Speech Service has a variety of no-code customization tools that make it easy, and allow you to create a competitive advantage with custom models based on your own data. These models will only be available to you and your organization.

Custom Speech-to-text

When using speech-to-text for recognition and transcription in a unique environment, you can create and train custom acoustic, language, and pronunciation models to address ambient noise or industry-specific vocabulary. The creation and management of no-code Custom Speech models is available through the Custom Speech Portal. Once the Custom Speech model is published, it can be consumed by the Speech SDK.

Custom Text-to-speech

Custom text-to-speech, also known as Custom Voice, is a set of online tools that allow you to create a recognizable, one-of-a-kind voice for your brand. The creation and management of no-code Custom Voice models is available through the Custom Voice Portal. Once the Custom Voice model is published, it can be consumed by the Speech SDK.

Get the Speech SDK

The Speech SDK supports Windows 10 and Windows Server 2016, or later versions. Earlier versions are not officially supported. It is possible to use parts of the Speech SDK with earlier versions of Windows, although it's not advised.

System requirements

The Speech SDK on Windows requires the Microsoft Visual C++ Redistributable for Visual Studio 2019 on the system.

C#

The .NET Speech SDK is available as a NuGet package and implements .NET Standard 2.0. For more information, see Microsoft.CognitiveServices.Speech.

C# NuGet Package

The .NET Speech SDK can be installed from the .NET Core CLI with the `dotnet add package Microsoft.CognitiveServices.Speech` command.

The .NET Speech SDK can also be installed from the Package Manager with the `Install-Package Microsoft.CognitiveServices.Speech` command.

Additional resources

For microphone input, the Media Foundation libraries must be installed. These libraries are part of Windows 10 and Windows Server 2016. It's possible to use the Speech SDK without these libraries, as long as a microphone isn't used as the audio input device.

The required Speech SDK files can be deployed in the same directory as your application. This way your application can directly access the libraries. Make sure you select the correct version (x86/x64) that matches your application.

| Name | Function |
|---|---|
| Microsoft.CognitiveServices.Speech.core.dll | Core SDK, required for native and managed deployment |
| Microsoft.CognitiveServices.Speech.csharp.dll | Required for managed deployment |

Note

Starting with the release 1.3.0 the file Microsoft.CognitiveServices.Speech.csharp.bindings.dll (shipped in previous releases) isn't needed anymore. The functionality is now integrated in the core SDK.

Important

For a Windows Forms App (.NET Framework) C# project, make sure the libraries are included in your project's deployment settings. You can check this under Properties -> Publish. Click the Application Files button and find the corresponding libraries in the list. Make sure the value is set to Included. Visual Studio will then include the files when the project is published or deployed.

C++

The C++ Speech SDK is available on Windows, Linux, and macOS. For more information, see Microsoft.CognitiveServices.Speech .

C++ NuGet package

The C++ Speech SDK can be installed from the Package Manager with the `Install-Package Microsoft.CognitiveServices.Speech` command.

Python

The Python Speech SDK is available as a Python Package Index (PyPI) module. For more information, see azure-cognitiveservices-speech. The Python Speech SDK is compatible with Windows, Linux, and macOS. Install it with `pip install azure-cognitiveservices-speech`.

Tip

If you are on macOS, you may need to run `python3 -m pip install --upgrade pip` to get the pip command above to work.

Java

The Java SDK for Android is packaged as an AAR (Android Library), which includes the necessary libraries and required Android permissions. It's hosted in a Maven repository at https://csspeechstorage.blob.core.windows.net/maven/ as package com.microsoft.cognitiveservices.speech:client-sdk:1.15.0.

To consume the package from your Android Studio project, make the following changes:

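- In the project-level build.gradle file, add `maven { url 'https://csspeechstorage.blob.core.windows.net/maven/' }` to the repositories section.

- In the module-level build.gradle file, add `implementation 'com.microsoft.cognitiveservices.speech:client-sdk:1.15.0'` to the dependencies section.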

The Java SDK is also part of the Speech Devices SDK.

The Speech SDK only supports Ubuntu 16.04/18.04/20.04, Debian 9/10, Red Hat Enterprise Linux (RHEL) 7/8, and CentOS 7/8 on the following target architectures when used with Linux:

- x86 (Debian/Ubuntu), x64, ARM32 (Debian/Ubuntu), and ARM64 (Debian/Ubuntu) for C++ development

- x64, ARM32 (Debian/Ubuntu), and ARM64 (Debian/Ubuntu) for Java

- x64, ARM32 (Debian/Ubuntu), and ARM64 (Debian/Ubuntu) for .NET Core

- x64 for Python

Important

For C# on Linux ARM64, the .NET Core 3.x (dotnet-sdk-3.x package) is required.

Note

To use the Speech SDK in Alpine Linux, create a Debian chroot environment as documented in the Alpine Linux Wiki on running glibc programs, and then follow Debian instructions here.

System requirements

For a native application, the Speech SDK relies on libMicrosoft.CognitiveServices.Speech.core.so. Make sure the target architecture (x86, x64) matches the application. Depending on the Linux version, additional dependencies may be required.

- The shared libraries of the GNU C library (including the POSIX Threads Programming library, libpthreads)

- The OpenSSL library (libssl.so.1.0.0 or libssl.so.1.0.2)

- The shared library for ALSA applications (libasound.so.2)
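One way to get a quick picture of whether a Linux machine has the architecture and shared libraries listed above is a small standard-library script. This is only a sketch: the library short names passed to `find_library` are assumptions derived from the file names above, and a result of `None` may simply mean the lookup tools (such as `ldconfig`) are unavailable, not that the library is missing.

```python
import ctypes.util
import platform

# Short names as ctypes expects them (no "lib" prefix, no version suffix).
REQUIRED_LIBRARIES = ("pthread", "ssl", "asound")


def check_native_dependencies(names=REQUIRED_LIBRARIES):
    """Map each library short name to the file name found, or None."""
    return {name: ctypes.util.find_library(name) for name in names}


if __name__ == "__main__":
    # The Speech SDK binaries must match this architecture (for example x86_64).
    print("architecture:", platform.machine())
    for name, found in check_native_dependencies().items():
        print(f"lib{name}: {found or 'not found'}")
```

If `libssl` is reported under a 1.1 file name, the note below about installing libssl1.1 applies.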

Note

If libssl1.0.x is not available, install libssl1.1 instead.

Important

- On RHEL/CentOS 7, follow the instructions on how to configure RHEL/CentOS 7 for Speech SDK.

- On RHEL/CentOS 8, follow the instructions on how to configure OpenSSL for Linux.

When developing for iOS, there are two Speech SDKs available. The Objective-C Speech SDK is available natively as an iOS CocoaPod package. Alternatively, the .NET Speech SDK could be used with Xamarin.iOS as it implements .NET Standard 2.0.

Tip

For details using the Objective-C Speech SDK with Swift, see Importing Objective-C into Swift .

System requirements

- A macOS version 10.13 or later

- Target iOS 9.3 or later

The iOS CocoaPod package is available for download and use with the Xcode 9.4.1 (or later) integrated development environment (IDE). First, download the binary CocoaPod . Extract the pod in the same directory for its intended use, create a Podfile and list the pod as a target.

Xamarin.iOS exposes the complete iOS SDK for .NET developers. Build fully native iOS apps using C# or F# in Visual Studio. For more information, see Xamarin.iOS .

When developing for macOS, there are three Speech SDKs available.

- The Objective-C Speech SDK is available natively as a CocoaPod package

- The .NET Speech SDK could be used with Xamarin.Mac as it implements .NET Standard 2.0

- The Python Speech SDK is available as a PyPI module

Tip

For details using the Objective-C Speech SDK with Swift, see Importing Objective-C into Swift .

System requirements

- A macOS version 10.13 or later

The macOS CocoaPod package is available for download and use with the Xcode 9.4.1 (or later) integrated development environment (IDE). First, download the binary CocoaPod . Extract the pod in the same directory for its intended use, create a Podfile and list the pod as a target.

Xamarin.Mac exposes the complete macOS SDK for .NET developers to build native Mac applications using C#. For more information, see Xamarin.Mac .

When developing for Android, there are two Speech SDKs available. The Java Speech SDK is available natively as an Android package, or the .NET Speech SDK could be used with Xamarin.Android as it implements .NET Standard 2.0.

Xamarin.Android exposes the complete Android SDK for .NET developers. Build fully native Android apps using C# or F# in Visual Studio. For more information, see Xamarin.Android.

The Speech SDK for JavaScript is available as an npm package, see microsoft-cognitiveservices-speech-sdk and its companion GitHub repository cognitive-services-speech-sdk-js .

Tip

Because the Speech SDK for JavaScript is available as an npm package, both Node.js and client web browsers can consume it. Consider the architectural implications of each environment, though. For example, the document object model (DOM) is not available to server-side applications, just as the file system is not available to client-side applications.

Node.js Package Manager (NPM)

To install the Speech SDK for JavaScript, run the `npm install microsoft-cognitiveservices-speech-sdk` command.

For more information, see the Node.js Speech SDK quickstart .

HTML script tag

Alternatively, you could directly include a <script> tag in the HTML's <head> element, relying on the JSDelivr NPM syndicate.

For more information, see the Web Browser Speech SDK quickstart .

Important

By downloading any of the Azure Cognitive Services Speech SDKs, you acknowledge its license. For more information, see:

Sample source code

The Speech SDK team actively maintains a large set of examples in an open-source repository. For the sample source code repository, visit the Microsoft Cognitive Services Speech SDK on GitHub . There are samples for C#, C++, Java, Python, Objective-C, Swift, JavaScript, UWP, Unity, and Xamarin.

Supported Python versions

Scrapy requires Python 3.6+, either the CPython implementation (default) or the PyPy 7.2.0+ implementation (see Alternate Implementations).
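Before installing, it can help to confirm that the interpreter you are about to use meets the stated requirement. The following is a minimal sketch using only the standard library. The function name and the `MINIMUM_PYTHON` constant are made up for this example, and the PyPy version check is left out for brevity.

```python
import platform
import sys

# Minimum Python version stated above for Scrapy.
MINIMUM_PYTHON = (3, 6)


def python_is_supported(version_info=sys.version_info, minimum=MINIMUM_PYTHON):
    """Return True if the (major, minor) version is at least `minimum`."""
    return tuple(version_info[:2]) >= tuple(minimum)


if __name__ == "__main__":
    # Reports "CPython" or "PyPy", the two implementations mentioned above.
    print(platform.python_implementation(), platform.python_version())
    print("supported:", python_is_supported())
```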

Installing Scrapy

If you’re using Anaconda or Miniconda, you can install the package from the conda-forge channel, which has up-to-date packages for Linux, Windows and macOS.

To install Scrapy using conda, run `conda install -c conda-forge scrapy`.

Alternatively, if you’re already familiar with installation of Python packages, you can install Scrapy and its dependencies from PyPI with `pip install Scrapy`.

Note that sometimes this may require solving compilation issues for some Scrapy dependencies depending on your operating system, so be sure to check the platform specific installation notes.

We strongly recommend that you install Scrapy in a dedicated virtual environment, to avoid conflicting with your system packages.

For more detailed and platform-specific instructions, as well as troubleshooting information, read on.

Things that are good to know

Scrapy is written in pure Python and depends on a few key Python packages (among others):

lxml, an efficient XML and HTML parser

parsel, an HTML/XML data extraction library written on top of lxml,

w3lib, a multi-purpose helper for dealing with URLs and web page encodings

twisted, an asynchronous networking framework

cryptography and pyOpenSSL, to deal with various network-level security needs

The minimal versions which Scrapy is tested against are:

Twisted 14.0

lxml 3.4

pyOpenSSL 0.14

Scrapy may work with older versions of these packages, but it is not guaranteed to keep working because it is not tested against them.
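To see how an environment compares with the minimal versions listed above, a short script can read the installed versions and compare them numerically. This is a sketch under a few assumptions: it relies on `importlib.metadata` (Python 3.8+), it only looks at the leading numeric components of each version string, and the helper names are invented for this example.

```python
import re
from importlib import metadata

# Minimal tested versions as listed above.
MINIMUM_VERSIONS = {"Twisted": "14.0", "lxml": "3.4", "pyOpenSSL": "0.14"}


def version_tuple(version):
    """Turn '3.4.1' into (3, 4, 1), stopping at the first non-numeric part."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if not match:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Compare two version strings after padding them to equal length."""
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_installed(minimums=MINIMUM_VERSIONS):
    """Map each package to True/False, or None if it is not installed."""
    report = {}
    for name, minimum in minimums.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None
        else:
            report[name] = meets_minimum(installed, minimum)
    return report


if __name__ == "__main__":
    for name, ok in check_installed().items():
        print(name, "not installed" if ok is None else ("ok" if ok else "too old"))
```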

Some of these packages themselves depend on non-Python packages that might require additional installation steps depending on your platform. Please check the platform specific installation notes below.

In case of any trouble related to these dependencies, please refer to their respective installation instructions.

Using a virtual environment (recommended)

TL;DR: We recommend installing Scrapy inside a virtual environment on all platforms.

Python packages can be installed either globally (a.k.a. system wide), or in user-space. We do not recommend installing Scrapy system wide.

Instead, we recommend that you install Scrapy within a so-called “virtual environment” (venv). Virtual environments allow you to not conflict with already-installed Python system packages (which could break some of your system tools and scripts), and still install packages normally with pip (without sudo and the like).

See Virtual Environments and Packages on how to create your virtual environment.

Once you have created a virtual environment, you can install Scrapy inside it with pip, just like any other Python package. (See below for non-Python dependencies that you may need to install beforehand.)
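Virtual environments are usually created from the command line, but the same can be done from Python with the standard `venv` module. The sketch below is illustrative only: the function name and the `scrapy-env` directory are placeholders, and `with_pip` defaults to `False` here so the example runs quickly and offline.

```python
import tempfile
import venv
from pathlib import Path


def create_environment(path, with_pip=False):
    """Create a virtual environment at `path` and return its pyvenv.cfg path."""
    builder = venv.EnvBuilder(with_pip=with_pip)
    builder.create(str(path))
    # pyvenv.cfg marks the directory as a virtual environment.
    return Path(path) / "pyvenv.cfg"


if __name__ == "__main__":
    # Demonstrate in a throwaway directory that is removed afterwards.
    with tempfile.TemporaryDirectory() as tmp:
        config = create_environment(Path(tmp) / "scrapy-env")
        print("created:", config.is_file())
```

For real use, pass `with_pip=True` and a permanent directory, then activate the environment before installing Scrapy into it.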

Platform specific installation notes

Windows

Though it’s possible to install Scrapy on Windows using pip, we recommend that you install Anaconda or Miniconda and use the package from the conda-forge channel, which will avoid most installation issues.

Once you’ve installed Anaconda or Miniconda, install Scrapy with `conda install -c conda-forge scrapy`.

Ubuntu 14.04 or above

Scrapy is currently tested with recent-enough versions of lxml, twisted and pyOpenSSL, and is compatible with recent Ubuntu distributions. But it should support older versions of Ubuntu too, like Ubuntu 14.04, albeit with potential issues with TLS connections.

Don’t use the python-scrapy package provided by Ubuntu; it is typically too old and slow to catch up with the latest Scrapy.

To install Scrapy on Ubuntu (or Ubuntu-based) systems, you need to install these dependencies, for example with `sudo apt-get install python3-dev zlib1g-dev libxml2-dev libxslt1-dev libssl-dev libffi-dev`:

- python3-dev, zlib1g-dev, libxml2-dev and libxslt1-dev are required for lxml

- libssl-dev and libffi-dev are required for cryptography

Inside a virtual environment, you can install Scrapy with pip after that, using `pip install scrapy`.

Note

The same non-Python dependencies can be used to install Scrapy in Debian Jessie (8.0) and above.

macOS

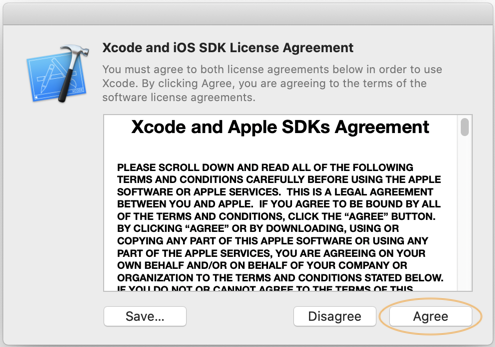

Building Scrapy’s dependencies requires the presence of a C compiler and development headers. On macOS this is typically provided by Apple’s Xcode development tools. To install the Xcode command line tools, open a terminal window and run `xcode-select --install`.

There’s a known issue that prevents pip from updating system packages. This has to be addressed to successfully install Scrapy and its dependencies. Here are some proposed solutions:

- (Recommended) Don’t use system python; install a new, updated version that doesn’t conflict with the rest of your system. Here’s how to do it using the homebrew package manager:

  - Install homebrew following the instructions in https://brew.sh/

  - Update your PATH variable to state that homebrew packages should be used before system packages (change .bashrc to .zshrc accordingly if you’re using zsh as default shell).

  - Reload .bashrc to ensure the changes have taken place, for example with `source ~/.bashrc`.

  - Install python with `brew install python`.

  - Latest versions of python have pip bundled with them so you won’t need to install it separately. If this is not the case, upgrade python with `brew update; brew upgrade python`.

- (Optional) Install Scrapy inside a Python virtual environment.

This method is a workaround for the above macOS issue, but it’s an overall good practice for managing dependencies and can complement the first method.
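After changing PATH, it is worth confirming which interpreter the shell will actually pick up. A small standard-library sketch follows. The function names are invented for this example, and the result depends entirely on the PATH of the process that runs it.

```python
import os
import shutil


def first_on_path(command="python3"):
    """Return the first executable named `command` found on PATH, or None."""
    return shutil.which(command)


def path_entries():
    """Return the PATH directories in lookup order, skipping empty entries."""
    return [p for p in os.environ.get("PATH", "").split(os.pathsep) if p]


if __name__ == "__main__":
    print("python3 resolves to:", first_on_path("python3"))
    for index, entry in enumerate(path_entries(), start=1):
        print(index, entry)
```

If the homebrew directory appears before the system directories in the printed order, the homebrew python should be the one that resolves first.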

After any of these workarounds you should be able to install Scrapy with `pip install Scrapy`.

PyPy

We recommend using the latest PyPy version. The version tested is 5.9.0. For PyPy3, only Linux installation was tested.

Most Scrapy dependencies now have binary wheels for CPython, but not for PyPy. This means that these dependencies will be built during installation. On macOS, you are likely to face an issue with building the Cryptography dependency. The solution to this problem is described here: run `brew install openssl` and then export the flags that this command recommends (only needed when installing Scrapy). Installing on Linux has no special issues besides installing build dependencies. Installing Scrapy with PyPy on Windows is not tested.

You can check that Scrapy is installed correctly by running `scrapy bench`. If this command gives errors such as `TypeError: ... got 2 unexpected keyword arguments`, this means that setuptools was unable to pick up one PyPy-specific dependency. To fix this issue, run `pip install 'PyPyDispatcher>=2.1.0'`.

Troubleshooting

AttributeError: ‘module’ object has no attribute ‘OP_NO_TLSv1_1’

After you install or upgrade Scrapy, Twisted or pyOpenSSL, you may get an exception whose traceback ends with the AttributeError above.

The reason you get this exception is that your system or virtual environment has a version of pyOpenSSL that your version of Twisted does not support.

To install a version of pyOpenSSL that your version of Twisted supports, reinstall Twisted with the tls extra option: `pip install twisted[tls]`.

For details, see Issue #2473.
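To check whether an environment is likely to hit this error, you can test directly for the attribute named in the message. The sketch below assumes the error originates from the `OpenSSL.SSL` module of pyOpenSSL. The function name is invented for this example, and a result of `None` only means pyOpenSSL could not be imported.

```python
def pyopenssl_has_tls11_flag():
    """Return True/False for the OP_NO_TLSv1_1 attribute, or None if
    pyOpenSSL is not importable in this environment."""
    try:
        from OpenSSL import SSL
    except ImportError:
        return None
    return hasattr(SSL, "OP_NO_TLSv1_1")


if __name__ == "__main__":
    status = pyopenssl_has_tls11_flag()
    if status is None:
        print("pyOpenSSL is not installed")
    elif status:
        print("OP_NO_TLSv1_1 is available")
    else:
        print("OP_NO_TLSv1_1 is missing; reinstalling Twisted with the tls extra may help")
```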